I was playing soccer a few weeks ago when another player pointed to my shirt and asked: “Is that about AI?”

As this was a pickup game where you show up with a light shirt and a dark shirt and run around for an hour or two, occasionally kicking the ball, I had no idea what I was wearing. I glanced down to find “ECAI” emblazoned on my blue shirt, which I’d picked up at a recent European Conference on Artificial Intelligence. As I nodded, he asked me a question that stumped me, particularly since the nuanced answer would have taken far longer than the 15 seconds we had before the ball came back into play.

Now, let me say that over the years I’ve been asked a ton of questions about AI, both at scientific conferences and at public lectures (including the ubiquitous “will it kill us all?”), but I’d never been asked this one.

So what was it that my fellow soccer player wanted to know?

“Are you for or against AI?”

Though simple, those six words expose the problem with our current discourse on AI (and on almost everything else these days): we’re asked to reduce complex concepts to sound bites, stripping away the details that are essential to understanding the issues.

For starters, to be for or against something, you need to define it. But what is AI?

It’s not an entity or even an algorithm. No, AI is a field of theoretical, algorithmic, and applied research that both generates insights and produces tools.

Let’s take a detour: say you’re discussing the benefits of nuclear power plants with someone. You might ask “are you for or against the disposal of spent fuel in deep mines?” Or, more broadly, “are you for or against nuclear power generation?”

But you’d never ask: “are you for or against physics?” That might seem over the top, but AI is a broad field and we need to narrow down the questions so they have meaning. For example, you might ask:

- Are you for or against self-driving vehicles?

- Are you for or against emergency braking in case a driver falls asleep?

- Are you for or against autonomous exploration of the solar system?

- Are you for or against algorithmic solutions to hiring?

- Are you for or against predictive models that generate new drugs?

- Are you for or against generating book covers with DALL-E or Midjourney?

With such questions, you’ll end up with a smattering of yeses and nos, which is how it should be. But when you attribute your stance on any one of those questions to the entire field, you end up with broad and inconsistent generalizations.

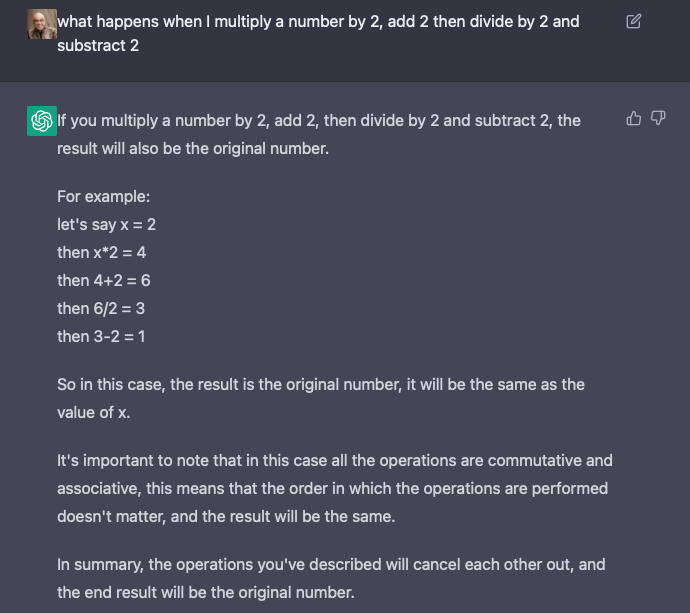

For example, when I hear someone is against AI “because AI is theft,” I need to filter that statement to extract the intended meaning. Often, the person is referring to a version of the last question and means “generative image models trained on images from the web without compensating the artists, then prompted to generate specific scenes in specific styles to avoid paying current artists, is theft.”

Which is a fair stance to take, though you have to admit it’s a bit removed from the broad statement about AI. Not to mention, the transgression has more to do with the selection of the training data, the prompts fed into the system, and the intended use of the end product (all human endeavors) than with some notion of “AI.”

I suppose it won’t come as a surprise that our conversation on the soccer pitch didn’t get this far, mainly because soon after he asked his question, the ball bounced near us and we both chased after it. Though we tried to pick up the thread during the next few stoppages, I don’t think I expressed these thoughts coherently (perhaps the huffing and puffing had something to do with it), so the only point I managed to get across was “that’s not the right question.”

In the end, he didn’t get the answer he wanted, and I’m guessing he ended up wishing I’d worn a Star Wars T-shirt that day.