Well, let’s dive right in: ChatGPT (which is based on GPT-3, which is based on GPT-2 and so on) is a chatbot from OpenAI that can interact with users and hold a conversation quite naturally. You can try it for yourself at chat.openai.com.

Now, because it responds well to generic questions and queries, there’s been some concern about the impact ChatGPT will have on writing (from novels to scripts to articles to essays). But first, let’s talk a little about how these algorithms work. (I will simplify a fair bit, so if you work on natural language processing, please forgive my transgressions; or yell at me, that works too.)

Fundamentally, ChatGPT is based on predicting the next word in a sequence, which is the basis of most, if not all, language algorithms. For example, if you had to complete a four-word sentence that started with “I NEED TO …” you’d have quite a few verbs to choose from. If the sentence had started with “I SAW A …” you’d guess a noun. But in either case, there are thousands of ways to complete those short sentences that would all be (somewhat) correct.

So if you had only three words to guess the next word, it would be difficult to generate coherent language as there isn’t enough context to narrow down the selection.

But what if you had to complete a sentence that started with “I HAD A LONG DAY, SO I TOOK A HOT SHOWER AND NOW I’M LYING IN …” ?

The odds of picking BED are very high, right? That’s one of the main reasons ChatGPT is so much better at generating text than your phone: it uses a much longer sequence of words to predict the next word!
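To make the effect of context concrete, here is a minimal sketch, assuming a tiny made-up corpus and plain word counting. The corpus, the function names, and the context sizes are all hypothetical choices for illustration. GPT itself is trained on vastly more text and does not simply look up exact matches.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus, for illustration only (not real training data).
CORPUS = [
    "i had a long day so i took a hot shower and now i am lying in bed",
    "after a long day i am lying in bed",
    "the cat is lying in the sun",
    "we spent the afternoon lying in the grass",
    "he keeps lying in court",
    "they live in paris",
    "she works in finance",
]

def build_model(sentences, context_size):
    """Count which word follows each run of `context_size` words."""
    model = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for i in range(context_size, len(words)):
            context = tuple(words[i - context_size:i])
            model[context][words[i]] += 1
    return model

def next_word_probabilities(model, context_words):
    """Turn the raw counts for one context into probabilities."""
    counts = model.get(tuple(context_words), Counter())
    total = sum(counts.values())
    if total == 0:
        return {}  # this context never appeared in the corpus
    return {word: count / total for word, count in counts.items()}

# A one-word context (closer to a simple phone keyboard) versus four words.
short_model = build_model(CORPUS, context_size=1)
long_model = build_model(CORPUS, context_size=4)

short_guess = next_word_probabilities(short_model, ["in"])
long_guess = next_word_probabilities(long_model, ["i", "am", "lying", "in"])

print("After 'in':", short_guess)
print("After 'i am lying in':", long_guess)
```

With only “in” as context, the toy model spreads its guesses over several words. With the longer context, every matching sentence in this small corpus ends the same way, so the prediction narrows to a single word.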

Now you might ask, how would you train for all possible twenty-word combinations? Aren’t there too many combinations? Well, there are, but guess what: there is also a lot of text on the internet, which is how GPT was trained.
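To get a feel for “too many combinations,” here is a quick back-of-the-envelope calculation. The vocabulary size of 50,000 words is an assumption picked for illustration, not a figure from GPT.

```python
# Assumed vocabulary size, chosen only for illustration.
VOCABULARY_SIZE = 50_000
SEQUENCE_LENGTH = 20

# Every position can hold any word, so the count multiplies at each step.
possible_sequences = VOCABULARY_SIZE ** SEQUENCE_LENGTH
digits = len(str(possible_sequences))

print(f"Possible {SEQUENCE_LENGTH}-word sequences: a {digits}-digit number")
```

Almost all of those sequences are gibberish that nobody would ever write, which is why a large body of real text is still useful despite the size of that number.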

This said, GPT also makes clever use of algorithmic tricks to treat certain words in a sequence as more important than others for the prediction. For example, in the long sentence above, “long day” and “lying in” are more closely linked to “bed” than “so” or “I” or “a” are.
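The idea of weighting some words more than others can be sketched as follows. The relevance scores below are invented by hand for illustration. In a real model, such scores are computed from learned parameters rather than written down, so treat this as a cartoon of the mechanism, not GPT’s implementation.

```python
import math

def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    highest = max(scores.values())  # subtracting the max keeps exp() stable
    exps = {word: math.exp(score - highest) for word, score in scores.items()}
    total = sum(exps.values())
    return {word: value / total for word, value in exps.items()}

# Hypothetical scores for how relevant each piece of the sentence is
# when predicting the final word. These numbers are made up.
RELEVANCE_SCORES = {
    "long day": 2.5,
    "hot shower": 1.5,
    "lying in": 3.0,
    "so": 0.1,
    "i": 0.1,
    "a": 0.1,
}

attention_weights = softmax(RELEVANCE_SCORES)

ranked = sorted(attention_weights.items(), key=lambda item: item[1], reverse=True)
for phrase, weight in ranked:
    print(f"{phrase:>10}: {weight:.2f}")
```

The filler words end up with small weights, so they contribute little to the prediction, while “lying in” and “long day” dominate.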

BTW, GPT stands for Generative Pre-trained Transformer. Generative refers to the word prediction part we discussed above. Pre-trained means the probability of any word in a particular sequence has already been determined (using text on the internet as a guide), though it can still be fine-tuned to context. Transformer is the term for the structure of the algorithm that determines how important a word is in predicting other words of the sequence (that is, what part of the sentence we should focus our attention on).

So GPT is a cool algorithm, but notice that while describing GPT, I never stated that it understands language, which is a claim that arises frequently. I’m willing to agree that GPT understands grammar: because it has seen so many sentences, it has statistically learned the structure of language. I’m much less convinced we can use “understand” to describe how ChatGPT handles the concepts it speaks about with authority.

So, yes, ChatGPT can generate coherent text, particularly when there is no objective truth to what needs to be said. Right now, with the right prompts, I’d bet it can do as well as most editorials, and certainly better than the letters to the editor in most publications. I’d say recommendation letters would be fair game (and quite convincing with the right prompts). It would do a solid job for personal statements (college essays), perhaps not as eloquent as the best, but probably better than the average one.

Ironically for an AI algorithm, it is fairly poor at math. We’ve been trained over many decades of science fiction and real-world success (chess, Go) to expect that AI algorithms would surpass us in math/logic, but not in writing or art. (The argument on whether an algorithm can generate art is a whole other matter; as a writer and AI researcher, I have pretty strong opinions on that one, but that’s a topic for another post.)

Now, I don’t want you to take my word that ChatGPT is poor at math, so let’s look at two answers to simple math questions:

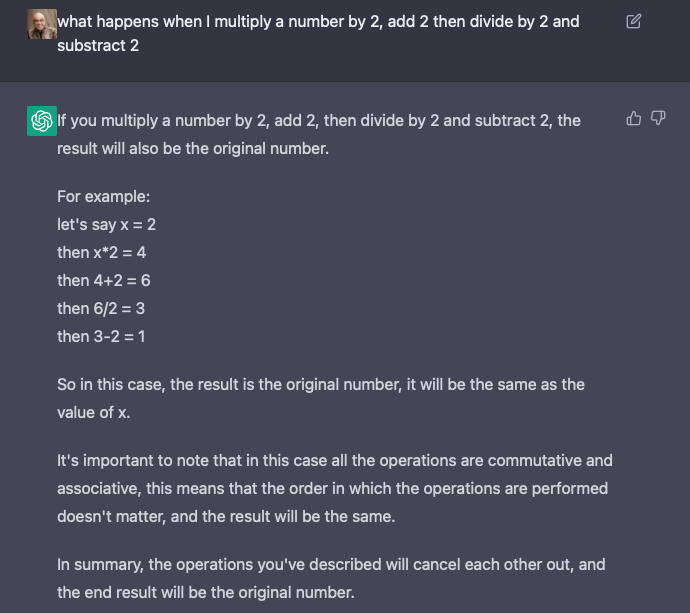

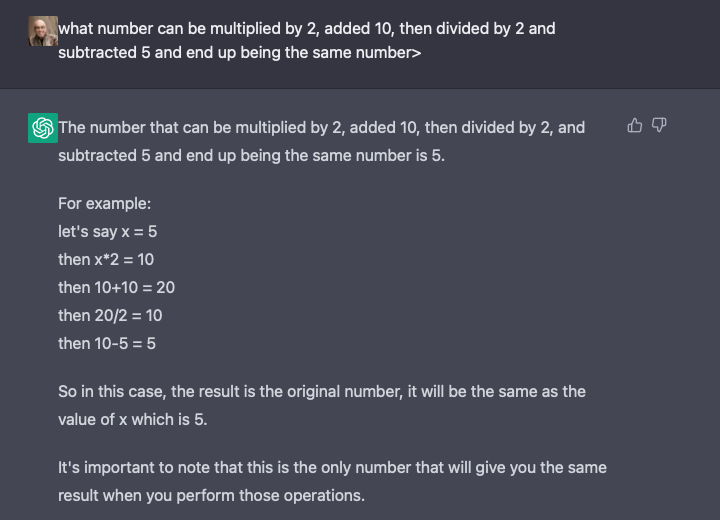

Example 1: I asked ChatGPT to do simple arithmetic:

There are three things that are disturbing about ChatGPT’s answer:

1- It’s wrong. Remember, this is described as a “sophisticated” algorithm trained with the “knowledge of the internet” and so on. Very few will entertain the notion that its answers might be wrong.

2- To justify its answer, ChatGPT works out an example that ends up NOT showing what it claimed (unless I missed the memo that 2 equals 1), but it doubles down on its incorrect conclusion. This means it is not connecting its answer to its example, which means it does not understand (that word again) what it is saying.

3- To sound authoritative, ChatGPT ends with a note that is complete nonsense (a word soup that mixes concepts) but does so in the clarifying tone of an all-knowing teacher.

So the problem isn’t just that the answer is wrong; it’s that unless you’re paying attention, the tone and subtle details will lull you into accepting the statements as correct.

Example 2: I asked ChatGPT a more subtle question that was somewhat ambiguous:

The answer starts fine (5 works, but so does any other number). The example is correct, so all is good. But then, ChatGPT ends with a note that claims 5 is the only number with this property. In fact, every number has this property!

So again, the tone and the “it’s important to note…” wording imply in-depth knowledge it does not have.

The issue here isn’t that ChatGPT made arithmetic errors. It’s the complete disconnect between its abilities and its tone.

So, with good text generation, limited context, and potentially incorrect answers, what should we make of ChatGPT? I’d say it’s an intriguing tool that can generate coherent and correct open-ended text. For example, when I gave ChatGPT the following prompt:

Can you write a recommendation letter for a student I’ve known for 15 months? Emphasize three key attributes: hard work, intelligence, and team work.

I ended up with a well-structured but generic letter, though not a terrible one. Then I asked ChatGPT to write a scene with the following prompt:

Write a scene where our hero hides in the basement of a restaurant while she’s pursued by the military. She picks the lock to get in and hides below a metal counter behind sacks of rice. Later, when the military pass, the owner spots her, and though the owner isn’t happy, she decides to help the hero. Also, write the dialog between the owner and our hero.

The two-page output wasn’t horrible, but it was cliched, repetitive, and the dialog was awful. Okay, it was pretty horrible. But was it fixable? I’d say not with what I gave it to work with. (BTW, there is such a scene in my current draft, and let’s just say I will stick to my version.)

I don’t want to end on a downer. ChatGPT is a tremendous achievement and its overall grasp of language is state of the art. But it is a language generation algorithm, nothing more and nothing less. We need to be careful of hyperbole, and be careful to not attribute intention, knowledge, or even understanding to its answers.

So I’ll leave you with this: the text examples I tried showed me that what we end up with depends greatly on the clarity and specificity of our prompts. So we’re opening a new field of specialization: prompt giving. The prompts have to be precise and descriptive to provide unique text, but broad enough to capture the general feel of the topic. For those who’ve read Purged Souls, you can think of these clever prompts as first-generation sniffers. 🙂

Update (February 21, 2023): Turns out ChatGPT’s relationship with accuracy and objective truth is worse than I’d initially thought. Here’s an interesting exploration of ChatGPT’s ability to recommend a particular style of book by Tema Frank, a historical fiction author and speaker. Asked to recommend books set in a specific time period, ChatGPT starts fine, makes mistakes, then invents books and attributes them to well-known authors. It’s a fascinating read that further exposes ChatGPT’s lack of subject matter understanding!

Caveat: I’d like to add a word of caution here to avoid anthropomorphizing ChatGPT. I don’t believe its brash discourse reflects the values or the personality of its Silicon Valley inventors. That assumes ChatGPT has values or a personality or even that it has been trained to be self-assured, which isn’t accurate. Similarly, I wouldn’t describe ChatGPT’s behavior as the Dunning-Kruger effect because that implies it overestimates its abilities. I’d argue that’s also not possible since it doesn’t have any notion of its abilities (although if you ask it, it will make something up).

I think the truth is simpler: ChatGPT doesn’t understand what an author or a book is, or what a place or time is (the same way it didn’t understand that 2 did not equal 1); it simply strings statistically likely words together, and if that means occasionally attributing nonexistent books to famous authors, well … that’s just the laws of statistics!