I was recently asked to give a talk on how AI is portrayed in fiction and news media, and as I scanned article after article, one thing became clear: AI algorithms (that is, lines of code) were anthropomorphized to an alarming extent. The systems were always portrayed as more advanced than they were, accompanied by hints about their emergent or unanticipated behaviors (when there were none), concluding with ominous warnings about the threats they posed (even when they didn’t).

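Here is a title (from a few years back) that illustrates this perfectly: “Facebook’s artificial intelligence robots shut down after they start talking to each other in their own language.”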

And another outlet’s report on the same project:

And no, I’m not linking to the articles, because that’s the whole point of these titles: they are clickbait.

Anyway, before we send our thanks to the brave souls who saved us from these dangerous robots in the nick of time, let’s dive into what this project was: it aimed to train chatbots to bargain.

So, let’s deconstruct this title:

Let’s look at the who, what, and how first:

Who: Artificial Intelligence Robots

Actually, they were chatbots. Already, the title is misleading in that it implies a physical presence that’s not there.

What: They start talking to each other.

Do you recall they were chatbots? That’s what chatbots do. They talk. So there’s nothing ominous here.

How: In their own language.

Okay, this could be disturbing if it were true, so let’s dig in. But first, a small detour on how most of the AI systems that play games are trained (and yes, bargaining is basically a game).

Because training an AI system requires repeatedly showing it which moves are good and which aren’t, the process is extremely data intensive. A neat way to sidestep this problem is to let the algorithm play against itself. For example, you can generate a lot of chess games if your chess-playing AI repeatedly plays itself. Called “self-play,” this process allows the system to learn by repeating strings of moves that result in wins and avoiding moves that lead to losses.
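To make self-play concrete, here is a minimal sketch on a toy game rather than chess or bargaining. The game (a Nim variant where players take 1 to 3 stones and whoever takes the last stone wins), the function names, and the parameters are all assumptions chosen for illustration; this is not the code of any system discussed here. A single shared table of win counts plays both sides, and each finished game nudges the table toward moves that ended in wins.

```python
import random

MAX_TAKE = 3  # assumed rule: a player may take 1, 2, or 3 stones per turn


def legal_moves(pile):
    return list(range(1, min(MAX_TAKE, pile) + 1))


def win_rate(stats, pile, move):
    wins, plays = stats.get((pile, move), (0, 0))
    # Untried moves get a neutral score so they are neither favored nor ruled out.
    return wins / plays if plays else 0.5


def best_move(stats, pile):
    # Pick the move whose past games ended in wins most often.
    return max(legal_moves(pile), key=lambda m: win_rate(stats, pile, m))


def play_game(stats, start_pile, rng, explore=0.2):
    """Play one game where both sides use the same table, then update the table."""
    pile, player, winner = start_pile, 0, None
    history = {0: [], 1: []}
    while pile > 0:
        if rng.random() < explore:
            move = rng.choice(legal_moves(pile))  # occasionally try something new
        else:
            move = best_move(stats, pile)
        history[player].append((pile, move))
        pile -= move
        if pile == 0:
            winner = player  # whoever takes the last stone wins
        player = 1 - player
    # Credit every move of the winner with a win; the loser's moves only gain a play.
    for who, moves in history.items():
        for key in moves:
            wins, plays = stats.get(key, (0, 0))
            stats[key] = (wins + (who == winner), plays + 1)
    return winner, history


def train(games=3000, seed=0):
    rng = random.Random(seed)
    stats = {}
    for g in range(games):
        # Vary the starting pile (1 to 10) so short endgames are seen too.
        play_game(stats, start_pile=1 + g % 10, rng=rng)
    return stats
```

Calling `train()` and then `best_move(stats, pile)` shows which move the table currently prefers for a given pile. No human ever labels a move as good or bad; the only signal is who won each self-played game.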

In this instance, self-play for chatbots meant they bargained with each other for hats, books, and balls.

Now remember, the system learns by associating moves (in this case, bargaining sentences) with wins and losses. So if a term appears equally often in wins and losses, it’s deemed useless. If a term appears mostly in wins, it’s deemed valuable.
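A rough sketch of that association, taken at face value: count how often each word shows up in winning versus losing sentences and score it by its share of wins. The function name `term_scores` and the toy log are invented for illustration, and this simple tally is only a stand-in for the idea described above, not the project’s actual training procedure.

```python
from collections import Counter


def term_scores(dialogues):
    """Score each word by its share of wins, shifted so 0.0 means 'no signal'."""
    wins, losses = Counter(), Counter()
    for sentence, won in dialogues:
        words = set(sentence.lower().split())  # count each word once per sentence
        (wins if won else losses).update(words)
    return {
        word: wins[word] / (wins[word] + losses[word]) - 0.5
        for word in wins.keys() | losses.keys()
    }


# Hypothetical bargaining log: (sentence, did the speaker win the deal?)
toy_log = [
    ("give three books to me", True),
    ("give the hats to me", True),
    ("the balls have value", True),
    ("the books have value", False),
    ("you take the hats", False),
]

scores = term_scores(toy_log)
```

In this toy log, “value” appears once in a win and once in a loss, so its score is zero, while “me” appears only in wins and gets the highest possible score. A learner that only sees scores like these has no reason to keep “value” and every reason to say “me” more often.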

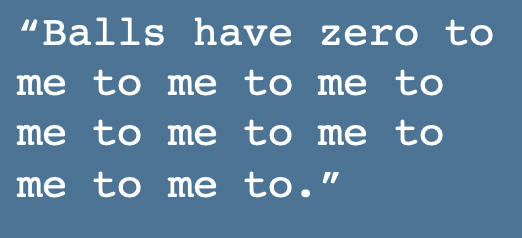

These chatbots realized that wins were associated with multiple bargaining rounds and that most bargaining sentences contained simple requests. For example, most sentences had “to me” in them (“give three books to me”). So these “clever” bots figured that if “to me” is good to have in consecutive sentences, it must be even better repeated within a single sentence, resulting in requests like:

Why did the chatbots do this? First, because the word “value” appeared equally in wins and losses, it conferred no advantage, so they dropped it as a shortcut. The words “to me,” on the other hand, were associated with wins, so the bots repeated them in the hope of raising the likelihood of a win.

I don’t know about you, but as a new and unintelligible language, this is underwhelming.

The main reason for this, of course, was that the bargaining self-play was set up improperly: it didn’t require the chatbots to stick to grammatical rules. This is like letting an AI system learn chess through self-play without providing the rules of the game. If the system learns to pick up a pawn and knock over the opponent’s king on the first move, we wouldn’t report it as “Artificial Intelligence Robot discovers stunning new chess move.” We would say someone didn’t do their job right.

With the who, what, how sorted out, we can now focus on the clincher: they were Shut Down!

This hits us emotionally, implying we dodged one but we may not be so lucky next time. Notice, they never mentioned what would have happened had the chatbots not been shut down, letting our Terminator-tainted brains fill in the gap.

Spoiler alert, here’s what would have happened: NOTHING.

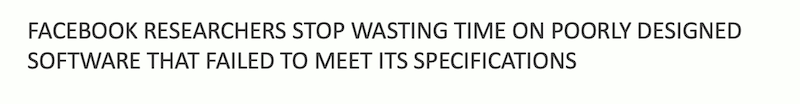

This entire title (and article) was emotional manipulation, so let’s translate it. When it says: Facebook’s artificial intelligence robots shut down after they start talking to each other in their own language, it actually means:

Now, that’s an accurate title. That said, I’m guessing fewer folks would have clicked on that article, so here we are.